Introduction: The Global Need for AI Governance Alignment

AI is changing the world, but without explicit safeguards, innovation can rapidly outpace safety, ethics, and accountability. This is why international regulators and standard-setting organizations are working to standardize how Artificial Intelligence (AI) systems are managed.

This is where three important frameworks come into play: the EU AI Act, Europe’s first comprehensive AI law; the NIST AI Risk Management Framework (AI RMF), developed in the United States; and ISO/IEC 42001, the world’s first AI Management System Standard.

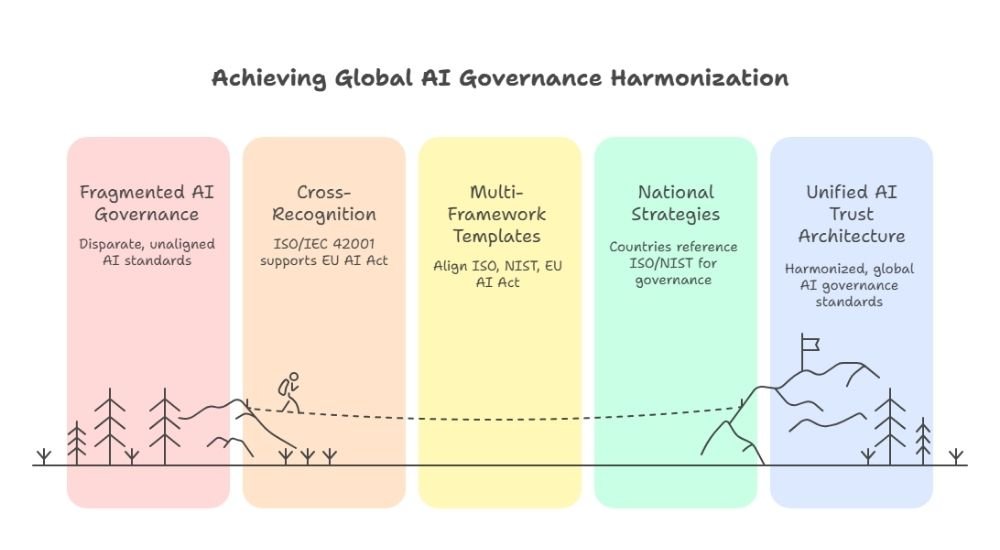

However, since each framework uses distinct terminology and evaluation criteria, many organizations experience compliance fatigue. Without a unified approach, businesses must juggle competing governance requirements, conduct redundant audits, and maintain several reporting systems.

When ISO/IEC 42001, the NIST AI RMF, and the EU AI Act are combined, they provide what many experts refer to as the “triad of trust,” a global framework for creating AI that is secure, transparent, and legally compliant.

Here, we look at how enterprises can create a single, integrated AI governance plan by examining how the ISO/IEC 42001 AI Management System (AIMS) aligns with the EU AI Act and the NIST AI RMF.

(For a deeper overview of ISO/IEC 42001 certifications and training, see our Master Guide on AI Governance Standards.)

Overview of ISO/IEC 42001, NIST AI RMF, and the EU AI Act

Before examining alignment, it helps to understand what each of these three frameworks covers.

ISO/IEC 42001 AI Management System (AIMS)

ISO/IEC 42001, which was published in 2023, is a management system standard for AI, comparable to ISO 27001 for information security. Its main aim is to help companies create structured processes for risk, accountability, transparency, and AI ethics. As the foundation of an AI governance program, it sets requirements for leadership, planning, operation, performance evaluation, and continual improvement.

NIST AI Risk Management Framework (AI RMF)

The NIST AI RMF is a voluntary framework created by the National Institute of Standards and Technology in the United States that focuses on risk identification, measurement, and mitigation. It helps businesses assess the benefits and risks of AI systems across their lifecycle and is organized into four core functions:

- Govern,

- Map,

- Measure, and

- Manage.

The EU AI Act

The EU AI Act, adopted in 2024, is the world’s first comprehensive legal framework for AI. It sorts AI systems into four risk categories: minimal, limited, high, and unacceptable. High-risk AI systems are subject to strict requirements for data governance, human oversight, risk management, and post-market monitoring.
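The four-tier structure can be sketched as a small lookup. This is a minimal illustration, not a legal classification tool: `RiskTier`, `OBLIGATIONS`, and `obligations_for` are hypothetical names, and only the high-risk obligations are taken from the description above. The entries for the other tiers are simplified assumptions.

```python
from enum import Enum


class RiskTier(Enum):
    """The four EU AI Act risk categories."""
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Obligations per tier. Only the HIGH entry comes from the article text;
# the other entries are simplified assumptions for illustration.
OBLIGATIONS = {
    RiskTier.MINIMAL: [],
    RiskTier.LIMITED: ["transparency notices"],
    RiskTier.HIGH: [
        "data governance",
        "human oversight",
        "risk management",
        "post-market monitoring",
    ],
    RiskTier.UNACCEPTABLE: ["prohibited: may not be placed on the market"],
}


def obligations_for(tier: RiskTier) -> list[str]:
    """Return a copy of the obligation list for the given tier."""
    return list(OBLIGATIONS[tier])


for tier in RiskTier:
    print(tier.value, "->", obligations_for(tier))
```

Keeping the tiers in an enum means a system inventory can store one value per AI system and derive its checklist from it, rather than copying obligations by hand.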

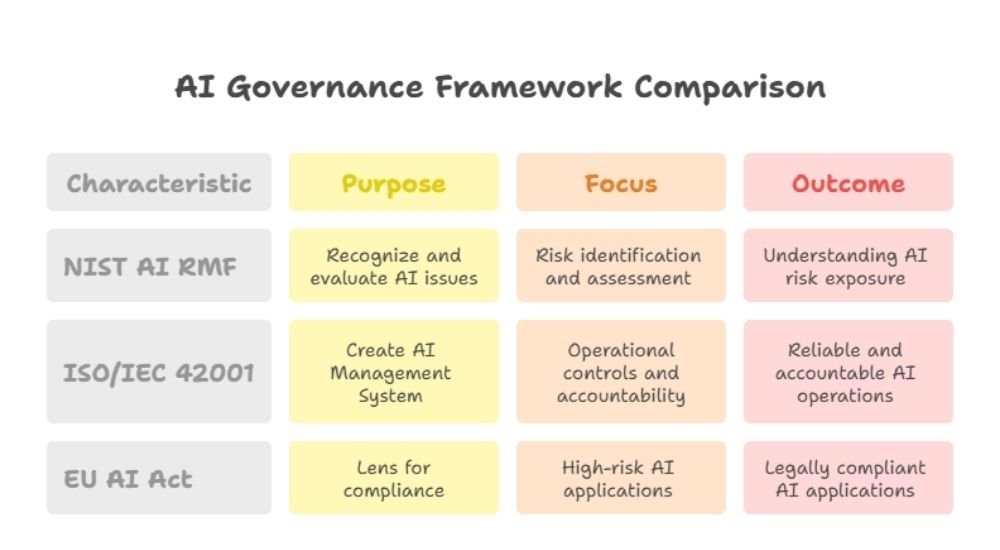

These three approaches work together to address various levels of AI accountability:

- NIST prioritizes performance and risk.

- ISO/IEC 42001 is all about governance and organizational procedures.

- The EU AI Act prioritizes adherence to and enforcement of the law.

How ISO/IEC 42001 Complements NIST AI RMF

Building trustworthy AI is the shared objective of the NIST AI RMF and the ISO/IEC 42001 AI Management System. However, they take different approaches: NIST concentrates on risk performance, while ISO concentrates on management processes.

Here’s how the two frameworks map to each other:

- ISO Clause 5: Leadership = NIST “Govern” Function

Under ISO/IEC 42001, top management must clearly define AI governance roles, responsibilities, and policies. This directly supports NIST’s “Govern” function, which places a strong emphasis on ethical oversight, documented risk ownership, and accountability structures.

- ISO Clause 6: Planning = NIST “Map” Function

Under this clause, organizations plan how to address AI risks, taking into account the internal and external factors that affect them. ISO Clause 6.1 requires risk assessment and treatment procedures, while NIST’s “Map” function entails establishing AI system context, intended use, and stakeholder impact.

- ISO Clause 8: Operational Planning & Control = NIST “Measure” and “Manage” Functions

ISO/IEC 42001 provides operational control through AI lifecycle management, while NIST encourages ongoing monitoring and assessment of AI risks.

In short, ISO 42001 supplies the “how” (governance procedures), while NIST AI RMF supplies the “what” (risk metrics and measurements).

Organizations can create quantifiable AI risk controls that are not only recorded (ISO-style) but also measured and tracked (NIST-style) by combining the two.
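As a rough sketch of what a control that is both recorded and measured might look like in a risk register, consider the following. The `RiskControl` class, the `status` helper, the sample controls, and the numeric thresholds are all hypothetical; only the clause-to-function pairings come from the mapping in this section.

```python
from dataclasses import dataclass
from typing import Optional

# ISO/IEC 42001 clause -> NIST AI RMF function(s), as paired in this section.
ISO_TO_NIST = {
    "Clause 5: Leadership": ["Govern"],
    "Clause 6: Planning": ["Map"],
    "Clause 8: Operational Planning & Control": ["Measure", "Manage"],
}


@dataclass
class RiskControl:
    name: str
    iso_clause: str                    # key in ISO_TO_NIST
    documented: bool                   # ISO-style: a recorded procedure exists
    metric: Optional[float] = None     # NIST-style: latest measured value
    threshold: Optional[float] = None  # highest acceptable metric value


def status(control: RiskControl) -> str:
    """Classify a control as undocumented, unmeasured, breached, or ok."""
    if not control.documented:
        return "undocumented"
    if control.metric is None or control.threshold is None:
        return "unmeasured"
    return "ok" if control.metric <= control.threshold else "breached"


# Illustrative entries only; names and numbers are made up.
controls = [
    RiskControl("bias review", "Clause 8: Operational Planning & Control",
                True, metric=0.03, threshold=0.05),
    RiskControl("risk ownership register", "Clause 5: Leadership", True),
    RiskControl("stakeholder impact map", "Clause 6: Planning", False),
]

for c in controls:
    print(f"{c.name}: {ISO_TO_NIST[c.iso_clause]} -> {status(c)}")
```

A control only reports `ok` when it has both a documented procedure and a metric within its threshold, which is the combination the two frameworks aim for together.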

(For a detailed guide on AI Risk Management under ISO standards, see this article on ISO/IEC 23894 and 42001 alignment.)

How ISO/IEC 42001 Aligns with the EU AI Act

The EU AI Act establishes mandatory legal requirements, whereas ISO/IEC 42001 is a voluntary standard. The good news is that the two are closely aligned, particularly for companies using high-risk AI systems.

Here is a detailed look at how ISO/IEC 42001 aligns with the EU AI Act:

- Article 9 of the EU AI Act and ISO Clause 6: Planning

Both require a defined AI risk management process. Article 9 makes it legally mandatory for high-risk AI, while ISO 42001 sets out the process requirements.

- Articles 10–13 of the EU AI Act and ISO Clause 7: Support

In line with the transparency, record-keeping, and data governance requirements of the EU AI Act, ISO places a strong emphasis on appropriate documentation, resource allocation, and data quality control.

- Articles 72–73 of the EU AI Act and ISO Clause 9: Performance Evaluation

To support continual improvement, ISO requires management reviews and internal audits. This aligns with the EU AI Act’s requirement to monitor and report on system performance after deployment.

As a result, companies that use the ISO/IEC 42001 AI Management System (AIMS) can show that they are prepared for EU AI Act audits by demonstrating that they have ethical AI governance procedures integrated into their regular business operations.
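One way to turn this alignment into a readiness check is a simple gap analysis over the clause pairings in this section. The sketch below is only illustrative: `ISO_TO_EU_TOPICS` and `coverage` are hypothetical names, the topic labels are shorthand rather than legal text, and a topic showing as covered here does not by itself establish legal compliance.

```python
# ISO/IEC 42001 clause -> EU AI Act topics it supports, following the
# pairings in this section. Topic labels are shorthand, not legal text.
ISO_TO_EU_TOPICS = {
    "Clause 6: Planning": {"risk management"},
    "Clause 7: Support": {"transparency", "record-keeping", "data governance"},
    "Clause 9: Performance Evaluation": {"post-deployment monitoring and reporting"},
}


def coverage(implemented_clauses: set[str]) -> tuple[set[str], set[str]]:
    """Return (covered, missing) EU AI Act topics for the implemented clauses."""
    all_topics = set().union(*ISO_TO_EU_TOPICS.values())
    covered: set[str] = set()
    for clause in implemented_clauses:
        # Clauses outside the mapping contribute no topics.
        covered |= ISO_TO_EU_TOPICS.get(clause, set())
    return covered, all_topics - covered


# Example: an organization that has implemented Clauses 6 and 7 only.
covered, missing = coverage({"Clause 6: Planning", "Clause 7: Support"})
print("covered:", sorted(covered))
print("missing:", sorted(missing))
```

In this example the missing set contains only the post-deployment monitoring topic, which points to Clause 9 as the next area to implement.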

Comparative Matrix: ISO 42001 vs NIST AI RMF vs EU AI Act

Here’s a look at a more compact comparison between the three frameworks:

| | ISO/IEC 42001 | NIST AI RMF | EU AI Act |
| --- | --- | --- | --- |
| Goal | A management system standard that lets organizations operationalize AI governance. | A risk-based framework for evaluating AI accountability, security, and reliability. | Legally binding rules for AI development, use, and market access in the EU. |
| Scope | Applies to organizations of any size that develop or use AI systems. | A voluntary framework used mostly by the U.S. public and private sectors. | Applies to any AI system that affects people in the EU, regardless of the company’s location. |
| Focus | Ethics, leadership, compliance, and governance of the AI lifecycle. | Risk assessment, transparency, and ongoing monitoring. | Conformity assessments, prohibited practices, and legal liability. |

The EU AI Act establishes the legal boundaries, ISO specifies the governance structure, and NIST provides the risk terminology. Together, they create a complete ecosystem for ethical AI.

Building an Integrated AI Governance Strategy

Organizations can use a three-step integration pathway to put into practice a global AI governance plan that takes into account all three frameworks:

To learn how to implement this strategy in your organization, explore the ISO/IEC 42001 Lead Implementer training at GAICC, which is designed to help professionals integrate these global frameworks effectively.

Global Industry Impact and Future Outlook

According to a McKinsey report, around 64 percent of organizations say AI is enabling their innovation. Adoption at this scale is why AI regulation is becoming the norm across the globe.

Here are some key trends shaping the future of AI governance:

The long-term objective is a world with transparent accountability models, common standards, and cross-border AI compliance.

How GAICC Helps

The Global AI Certification Council (GAICC) is a recognized training and certification body for ISO/IEC 42001.

Professionals and companies can benefit from GAICC by:

- Understanding the ISO 42001 AI Standard and how it relates to NIST and the EU AI Act.

- Getting help to build, audit, and validate Artificial Intelligence Management Systems (AIMS).

- Incorporating ethical AI governance procedures across projects, divisions, and countries.

- Obtaining internationally recognized credentials such as ISO/IEC 42001 Lead Implementer, Lead Auditor, and Internal Auditor.

- Gaining real-world experience through case studies on NIST AI RMF integration and EU AI Act alignment, which are part of the Senior Lead Implementer and Senior Lead Auditor curricula.

GAICC’s programs provide organized learning and practical implementation advice for companies getting ready for ISO 42001 Certification or impending AI Act audits.

Final Takeaway on Global AI Governance Alignment

The EU AI Act, NIST AI RMF, and ISO/IEC 42001 are driving the convergence of the global AI environment. The foundation of AI governance includes:

- ISO/IEC 42001, which ensures consistent procedures and accountability.

- NIST AI RMF, which helps measure control logic and risk maturity.

- The EU AI Act, which provides public trust and legal enforceability.

When combined, they produce a global model for responsible AI governance that strikes a balance between innovation, safety, ethics, and compliance.

The GAICC ISO/IEC 42001 training programs provide the ideal basis for individuals and businesses prepared to advance in this dynamic compliance environment.