AI risk management is essential to ethical AI because organizations need to identify and manage the risks associated with AI systems as they develop and deploy them. Without a strong risk management strategy, an AI system may produce biased outcomes, undermine trust, create safety or security problems, or violate regulations.

This is where the ISO/IEC 23894 standard comes in, providing a focused approach to handling these AI-specific issues. In short, ISO 42001 provides the what and where for creating the governance, procedures, and controls surrounding AI risk management, while ISO 23894 provides the how. For organizations looking to adopt ISO 42001, an understanding of ISO 23894 principles is a must for demonstrating that their AI management system (AIMS) is effective. A good certification guide can also help you through the process.

Understanding ISO/IEC 23894

Types of AI Risks

AI systems present a range of risks. Here is a list of some of the most common ones:

- Bias and fairness risks: AI models may incorporate bias and discriminate unfairly.

- Explainability and transparency risks: A lack of insight into the reasoning behind certain decisions or answers can erode confidence or undermine regulatory compliance.

- Safety and security risks: AI systems may behave unexpectedly or be vulnerable to adversarial attacks.

- Privacy and data quality risks: AI results can be compromised by subpar data or insufficient data governance.

- Operational and lifetime risks: Problems with deployment, monitoring, and maintenance can cause AI systems to malfunction or perform worse.

Risk Sources and Stakeholders

AI risks come from a variety of sources. These include:

- Data sources, which may contain incomplete, biased, or out-of-date data.

- Model construction and training, which includes algorithm selection, artifact bias, etc.

- Context of deployment, like the use case, user interaction, hostile environment, etc.

Stakeholders typically include developers, operators, end-users, regulators, and the general public.

Risk Lifecycle

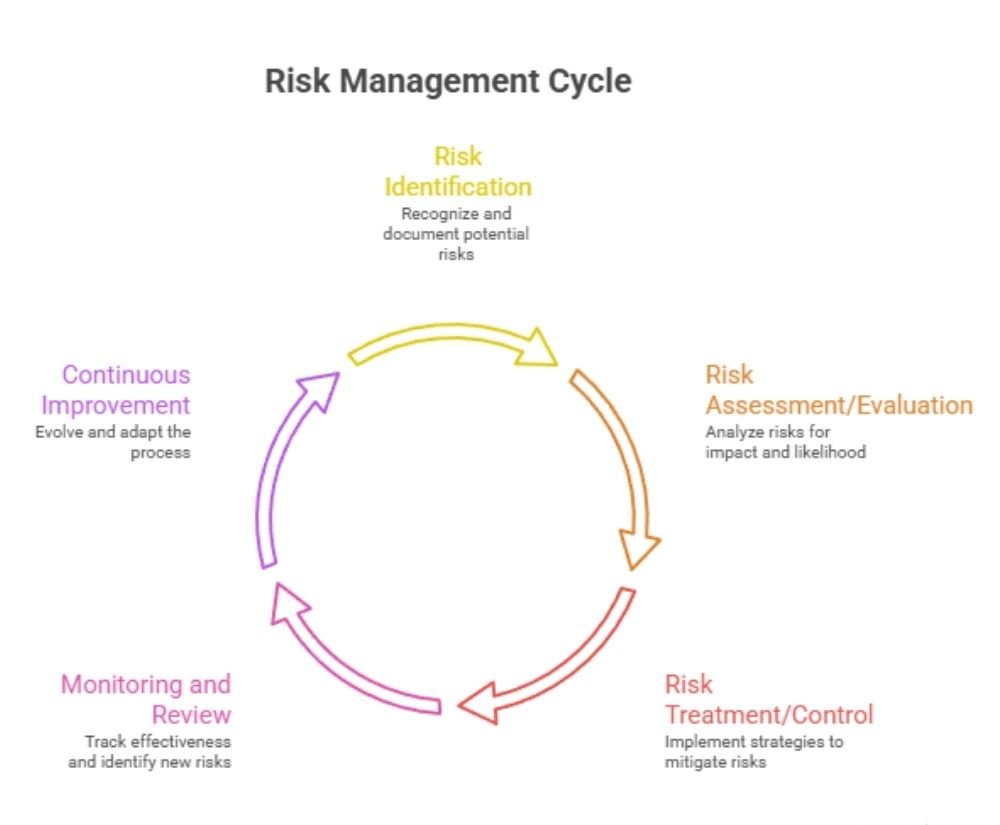

Managing AI risks means working through the lifecycle shown above.

This lifecycle is built into the guidance of ISO/IEC 23894. (vde-verlag.de)

The Connection Between ISO 23894 and ISO 42001

Mapping Risk Controls into ISO 42001 Clauses

Organizations must demonstrate that they have controls in place to limit AI risk when putting ISO 42001 into practice. This is where the lead implementer certification guide can help set the process in motion.

Several ISO 42001 provisions, like risk assessment, monitoring, and improvement, are satisfied by the controls and procedures provided by ISO 23894.

For instance, ISO 42001 includes a provision on managing risks and opportunities for AI. Use the ISO 23894 guidelines, which cover bias detection, explainability tests, and data quality assurance, to clarify what that provision means for your AI systems.

- You’ll need evidence of risk-treatment measures when being audited for ISO 42001 certification, and ISO 23894 provides the techniques for producing it.

- Treating ISO 23894 as the risk management engine inside the ISO 42001 “management system” makes the relationship between the AIMS and AI governance and compliance easier to understand.

Building an AI Risk Management Framework

According to a survey by McKinsey, inaccuracy is one of two risks that most organizations are working to mitigate. This makes a sound AI risk management framework important. Here is a step-by-step breakdown of how to build one.

Step 1: Establish the Context and Scope of AI

- Determine which AI systems or parts fall under your AIMS’s purview.

- Describe the mission, stakeholders, and legal and regulatory environment of the organization.

- Set risk criteria, such as the acceptable level of residual risk and the main goals.
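As a rough illustration of what "risk criteria" can look like once written down, the sketch below records a rating scale and an acceptable residual-risk threshold. The class name `RiskCriteria`, the 1 to 5 scale, and the threshold of 6 are all hypothetical choices made for this example; ISO 23894 does not prescribe them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskCriteria:
    """Hypothetical risk criteria for an AIMS scope; all values are illustrative."""

    scale_max: int = 5           # likelihood and severity are each rated 1..scale_max
    max_residual_score: int = 6  # highest residual score accepted without more treatment

    def score(self, likelihood: int, severity: int) -> int:
        # Reject ratings outside the agreed scale so the criteria stay consistent.
        for name, value in (("likelihood", likelihood), ("severity", severity)):
            if not 1 <= value <= self.scale_max:
                raise ValueError(f"{name} must be between 1 and {self.scale_max}")
        return likelihood * severity

    def is_acceptable(self, residual_likelihood: int, residual_severity: int) -> bool:
        # Residual risk is the risk that remains after controls are applied.
        return self.score(residual_likelihood, residual_severity) <= self.max_residual_score


criteria = RiskCriteria()
print(criteria.is_acceptable(2, 3))  # score 6 is within the threshold
print(criteria.is_acceptable(3, 4))  # score 12 exceeds the threshold
```

Keeping the criteria in one place means later steps (assessment, prioritization, review) all measure against the same agreed thresholds.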

Step 2: Determine and Categorize Hazards

- Determine where your AI system might malfunction or cause harm, using the lifecycle and types of potential risks.

- Then sort hazards according to their impact on stakeholders, likelihood, and severity.

- Document everything, including stakeholders, impacted assets, and risk sources.

Step 3: Evaluate and Set Priorities

- Evaluate each risk that has been identified in terms of its likelihood of happening, the severity of its effects, and its controllability.

- Determine which risks should be treated first based on the assessment’s findings and the organization’s risk tolerance.

- For clarity, use dashboards, heat maps, or risk assessment tables.
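The evaluation and prioritization described above can be sketched as a small risk register. This is a minimal example under stated assumptions: the 1 to 5 scales, the tolerance value, the sample risks, and the rule of multiplying likelihood by severity are one common convention chosen for illustration, not something the standards mandate.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int       # 1 (rare) .. 5 (almost certain)
    severity: int         # 1 (negligible) .. 5 (critical)
    controllability: int  # 1 (hard to control) .. 5 (easy to control)

    @property
    def score(self) -> int:
        return self.likelihood * self.severity


def prioritize(risks, tolerance):
    """Return risks scoring above the tolerance, highest score first.

    Ties are broken in favor of the risk that is harder to control.
    """
    above = [r for r in risks if r.score > tolerance]
    return sorted(above, key=lambda r: (-r.score, r.controllability))


# Hypothetical register entries.
register = [
    Risk("Biased training data", 4, 4, 2),
    Risk("Model drift after deployment", 3, 4, 4),
    Risk("Adversarial input attack", 2, 5, 2),
    Risk("Incomplete decision logs", 2, 2, 5),
]

for risk in prioritize(register, tolerance=6):
    print(f"{risk.score:>2}  {risk.name}")
```

The sorted output is the kind of table that can feed a heat map or dashboard directly.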

Step 4: Put Technical, Procedural, and Ethical Controls in Place

- Technical controls include adversarial testing, interpretability/explainability features, human-in-the-loop supervision, and model validation.

- Procedural controls include governing committees, review boards, and established monitoring and incident response procedures.

- Ethical controls include fairness and bias checks, accountability frameworks, transparency policies, and stakeholder involvement.

These controls are based on ISO 23894’s guidelines and are then mapped into your ISO 42001 AIMS.
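One way to check that every identified risk has at least one control of some type is a simple coverage map. The sketch below is illustrative only: the catalogue entries, risk names, and the `map_controls` helper are hypothetical.

```python
from collections import defaultdict

# Hypothetical catalogue: (control name, control type, risks it treats).
CONTROLS = [
    ("Adversarial testing", "technical", ["Adversarial input attack"]),
    ("Human-in-the-loop supervision", "technical", ["Biased training data"]),
    ("Incident response procedure", "procedural", ["Model drift after deployment"]),
    ("Fairness and bias checks", "ethical", ["Biased training data"]),
]

# Hypothetical risks carried over from the assessment step.
RISKS = [
    "Adversarial input attack",
    "Biased training data",
    "Model drift after deployment",
    "Unexplainable credit decisions",
]


def map_controls(risks, controls):
    """Return {risk: [(control type, control name), ...]} for every risk."""
    treated = defaultdict(list)
    for name, kind, targets in controls:
        for risk in targets:
            treated[risk].append((kind, name))
    return {risk: treated.get(risk, []) for risk in risks}


coverage = map_controls(RISKS, CONTROLS)
untreated = [risk for risk, found in coverage.items() if not found]
print("Risks without a control:", untreated)
```

Any risk that appears in `untreated` is a gap to close before the controls are documented in the AIMS.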

Step 5: Track, Examine, and Enhance

- Continuously monitor system performance, drift, new threats, and user complaints.

- Conduct internal or external audits of your risk controls and AIMS to confirm their continued efficacy.

- Revise your risk management approach in light of findings, lessons learned, and contextual shifts.
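Drift monitoring can be made concrete with a distribution comparison. The sketch below uses the Population Stability Index (PSI), a metric chosen here for illustration and not named in the source or required by ISO 23894. The bin count, the `eps` floor, the sample data, and the 0.2 alert threshold are all assumptions.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # Floor each share at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical model scores: the live sample has shifted toward higher values.
baseline = [i / 100 for i in range(100)]
live = [0.5 + i / 200 for i in range(100)]

drift = psi(baseline, live)
ALERT_THRESHOLD = 0.2  # illustrative threshold, tune to your own risk criteria
if drift > ALERT_THRESHOLD:
    print(f"Drift alert: PSI={drift:.2f}, open an incident and review the model")
```

A check like this can run on a schedule, with alerts feeding the incident and review procedures defined in Step 4.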

Risk Treatment Techniques for AI Systems

Here are some essential methods to consider:

- Human-in-the-loop oversight and escalation: make sure critical decisions are reviewed by humans.

- Model validation and interpretability tools: test models, verify results, and support explainability.

- Data quality management: maintain proper data hygiene, look for bias, and make sure the data is representative.

- Bias identification and fairness testing: measure fairness, examine the effects on subgroups, and correct unfavorable results.

- Explainability and transparency records: keep documentation, decision logs, data traceability, and model versions.

- Continuous feedback loops and incident reporting: establish systems for recording, evaluating, and reacting to AI system failures, near-misses, and incidents.

These methods, which are based on ISO 23894 guidelines, collectively constitute the “treatment” side of risk management.
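To show how fairness testing and human escalation can work together, the sketch below measures the gap in approval rates between subgroups and flags the model for human review when the gap is too large. Demographic parity is only one of several fairness metrics and is used here as an example; the function names, sample decisions, and the 0.1 tolerance are hypothetical.

```python
from collections import defaultdict


def selection_rates(records):
    """Approval rate per group from an iterable of (group, approved) pairs."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Difference between the highest and lowest group approval rates."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical decisions: group A is approved 8 times out of 10, group B 5 out of 10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

gap = demographic_parity_gap(decisions)
MAX_GAP = 0.1  # illustrative tolerance taken from your own risk criteria
needs_human_review = gap > MAX_GAP
print(f"Parity gap: {gap:.2f}, escalate to human review: {needs_human_review}")
```

Logging each result alongside the model version also produces the decision records that the transparency techniques call for.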

Integrating Risk Management into ISO 42001 Certification

During Implementation

- Include your monitoring dashboards, risk treatment plans, and risk records in the AIMS documentation that ISO 42001 requires.

- Make sure that your AIMS’s roles, duties, management review, and performance metrics are clearly linked to risk management initiatives.

During Audit

- Demonstrate that you followed ISO 23894’s risk-management approach.

- Present concrete metrics, such as the number of hazards found, the number of controls put in place, the effectiveness of those controls, incidents found, and lessons learned.

- This is the work of a lead auditor, who has completed a lead auditor certification and supports organizations through the audit.
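The audit metrics mentioned above can be derived from records you already keep. The following is a minimal sketch, assuming a hypothetical `ControlRecord` structure and incident dictionaries with a `lesson` field; a real AIMS would pull these from its risk register and incident log.

```python
from dataclasses import dataclass


@dataclass
class ControlRecord:
    control: str
    checks_run: int     # how many times the control was exercised or tested
    checks_passed: int  # how many of those checks met expectations


def audit_summary(hazards, controls, incidents):
    """Summarize audit evidence as a dictionary of simple metrics."""
    run = sum(c.checks_run for c in controls)
    passed = sum(c.checks_passed for c in controls)
    return {
        "hazards_found": len(hazards),
        "controls_in_place": len(controls),
        "control_pass_rate": passed / run if run else None,
        "incidents_recorded": len(incidents),
        "incidents_with_lessons": sum(1 for i in incidents if i.get("lesson")),
    }


# Hypothetical evidence for one audit period.
summary = audit_summary(
    hazards=["Biased training data", "Model drift", "Adversarial input"],
    controls=[
        ControlRecord("Fairness checks", 20, 18),
        ControlRecord("Drift monitor", 10, 6),
    ],
    incidents=[{"id": 1, "lesson": "Retrain quarterly"}, {"id": 2, "lesson": ""}],
)
for metric, value in summary.items():
    print(f"{metric}: {value}")
```

Here the pass rate stands in for "effectiveness"; how effectiveness is actually defined should follow your own risk criteria.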

During Continuous Improvement

- Utilize risk analytics dashboards to inform AIMS management review meetings.

- As the AI environment evolves, update your risk criteria, controls, and monitoring, keeping in mind new laws, new threats, new use cases, etc.

- Continuous improvement and risk management sit at the core of ISO 42001, which helps you stay aware of changing hazards and adjust accordingly.

Tools and Frameworks That Complement ISO 23894

To strengthen your global compliance readiness, consider integrating these frameworks alongside ISO 23894:

NIST AI RMF (U.S.)

The NIST AI Risk Management Framework provides a risk-management roadmap for AI in the US context, and it can be mapped to ISO 23894’s processes.

EU AI Act risk tiers

The EU Artificial Intelligence Act introduces risk-tiered regulation for AI systems in Europe (unacceptable-risk, high-risk, limited-risk, minimal-risk). Aligning your risk management under ISO 23894 helps you prepare for this.

OECD AI Principles

The Organisation for Economic Co‑operation and Development (OECD) AI Principles emphasise transparency, fairness, accountability, and robustness. Many risks treated under ISO 23894 link directly to these principles.

ISO/IEC 22989 – Conceptual definitions

ISO/IEC 22989 provides foundational definitions for AI systems and terminology, which support consistent risk identification and classification in ISO 23894.

By aligning with multiple frameworks, your risk-management foundation becomes robust, resilient, and globally oriented.

Benefits of Strong AI Risk Management

There are numerous advantages to putting in place a strong AI risk-management program under ISO 23894. Here is a look at some of them:

- Minimizes AI incidents and reputational damage by preventing significant failures through early risk awareness and control.

- Increases stakeholder and regulatory trust, as clear, documented risk management fosters trust among partners, customers, and regulators.

- Accelerates ISO 42001 compliance readiness. AIMS certification goes more smoothly when risk processes are already well developed.

- Creates governance for accountability, transparency, fairness, and safety while supporting ethical, explainable, and auditable AI activities.

- Enables innovation within bounds. Deliberate risk management lets you build more freely, with less worry about regulatory or compliance repercussions.

Common Mistakes to Avoid

When managing AI risk and attempting to implement ISO 42001/23894, organizations may encounter certain pitfalls. Some of them are listed below.

- Treating risk management as a one-off checklist exercise rather than a continuous lifecycle process.

- Ignoring post-deployment monitoring of the AI system, which leaves drift, misuse, and the effects of environmental changes undetected.

- Forgetting that risk is not only technical; it also involves cross-functional teams such as governance, legal, ethics, and data science.

- Neglecting social and ethical issues such as bias, fairness, and stakeholder impact while concentrating primarily on technical ones such as model correctness and security.

- Assuming that adopting ISO 42001 alone is enough, which can leave important gaps if you lack the extensive risk-control layer of ISO 23894.

By avoiding these errors, you can make sure that your AIMS is reliable and efficient.

How GAICC Helps

The Global AI Certification Council (GAICC) offers material, guidelines, and training to help you align your AI governance and compliance approach with ISO 23894 and ISO 42001. The Lead Implementer Certification Guide and the Lead Auditor Certification Guide provide comprehensive certification courses and best-practice recommendations. Your path to complete compliance and strong AI governance is aided by these interconnected resources.

FAQs

- ISO/IEC 23894 is a set of guidelines on risk management for AI systems. It focuses on AI risk identification, assessment, treatment, and monitoring.

- ISO/IEC 42001 is a management system standard for AI. It sets requirements for establishing, implementing, maintaining, and continuously improving an AI management system (AIMS).

Although it is strongly advised, ISO 23894 is not a formal requirement for ISO 42001 certification. Without the risk-management basis of ISO 23894, however, your AIMS under ISO 42001 may not demonstrate adequate risk controls, which could hinder certification readiness.

Organisations measure AI risk by defining risk criteria (e.g., likelihood × impact), using dashboards or heat maps, and tracking metrics such as the number of incidents, bias-test failures, model drift rates, number of control failures, and stakeholder complaints. ISO 23894 emphasises documentation, monitoring, and review.

Tools vary but usually include:

- Frameworks for model validation and interpretability toolkits

- Software for testing bias and fairness

- Data quality dashboards

- AI-specific risk-registering systems

- Systems for tracking anomalies, drift, and user complaints

Final Thoughts

Organizations cannot afford to approach AI governance and risk management as an afterthought in the current era of rapid AI adoption. The combination of ISO/IEC 42001 (AI management system standard) and ISO/IEC 23894 (risk management guidance) provides a strong, methodical approach to creating an accountable, reliable, and auditable AI operation.

By treating ISO 23894 as the foundation of your AIMS under ISO 42001, you can make sure that risk is not only managed but also integrated, monitored, improved, and aligned with AI governance and compliance. If you embrace the process, involve your teams, and set up your framework, you can create AI systems that are both innovative and responsible.